db-ip.com

db-ip.com automates IP lookups, offering accurate geolocation for proxy checks, e-commerce targeting, and risk analysis. It is useful for studying traffic trends and demographics, and its data is updated through ISP agreements and machine learning. It lets you find IP address details quickly.

IPinfo.io

IPinfo.io offers robust IP lookup tools, including traffic tracking, abusive IP reporting, and targeted IP services via well-documented APIs. Free plans include 50,000 lookups, while paid options start at $99/month. Unique features like the IP Summarizer allow users to view traffic maps based on IP lists.

MaxMind

MaxMind, with over 20 years of experience, is trusted for IP geolocation, helping with targeted marketing, content localization, and fraud prevention. While the free version is limited, the paid enterprise packages offer more detailed and frequently updated data, making it a top choice for businesses.

IPHub.info

IPHub.info is known for its accurate database covering over 300 million IPs. It offers up to 1,000 free queries daily, with affordable paid plans for increased usage. API access is available for easy integration, making it a strong choice for users needing large-scale IP lookups.

IPhey.com

IPhey.com delivers detailed IP analysis, including browser, location, and hardware data. It flags suspicious setups, especially those produced by anti-detect browsers, so its reports can be inaccurate for such configurations. It is best suited for users with standard browsing setups.

Whatismyipaddress.com

Whatismyipaddress.com provides simple IP lookups, including location and IP type identification (IPv4 or IPv6). It partners with VPN providers and offers blacklisted IP checks. However, its geolocation is occasionally inaccurate because some of its databases are outdated.

Whoer.net

Whoer.net delivers IP data such as location, browser, and proxy detection. It promotes its own VPN services, which raises concerns about data bias. Some users report false positives, especially when identifying proxy ports.

Scamalytics

Scamalytics specializes in fraud prevention by analyzing IPs for malicious activity. It’s particularly useful for e-commerce, banking, and online platforms. However, scoring entire ISPs rather than individual IPs can result in false positives for large networks.

IPQualityScore

IPQualityScore offers risk assessments, fraud detection, and proxy verification tools. It uses a scoring system in which a lower score indicates a safer IP. Because it relies on historical data, its scores are sometimes inaccurate, and once an IP has been flagged as risky, its score can be difficult to change.

IP2Location

IP2Location provides basic IP geolocation for IPv4 and IPv6 addresses. However, its database is not updated frequently, which can lead to inaccuracies. Free users are limited to 50 queries per day, so it is better suited to occasional use.

Conclusion

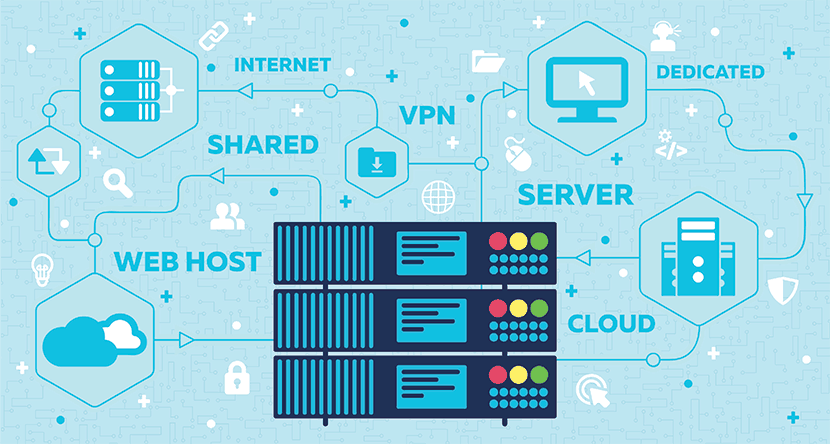

IP lookup services are valuable tools for monitoring website traffic, verifying the quality of private proxies, and improving security. Whether you run a website or just need to verify proxy details, these services can help streamline your operations. If you have questions or need further guidance on proxy testing, our experienced account managers can offer advice and solutions tailored to your needs.